React + Performance = ?

React is very popular at the moment, and I can see why: its developer ergonomics are very attractive. JSX and vDOM are really nice to work with, and it certainly enables composability. But, being the performance-minded person that I am, I wanted to test the claims that it's default-fast.

Update 7/3/15: I failed to use the minified version of React in the test, which is the production version. Without that, React's performance characteristics are worse. This was my (unwitting) mistake, and I apologise. I have since re-run the test, and updated the graphs and any commentary. The good news is that some of the numbers did come down (yay!), but not by enough for my conclusions to change.

Time to have a play with React, I thought. And so I set about having a noodle, and, looking over the docs, I noticed this little nugget:

You may be thinking that it's expensive to change data if there are a large number of nodes under an owner. The good news is that JavaScript is fast and render() methods tend to be quite simple, so in most applications this is extremely fast. Additionally, the bottleneck is almost always the DOM mutation and not JS execution. React will optimize this for you using batching and change detection.

That feels like something that needs putting to the test...

What's a fair test here, guv? #

I know that React's performance has been compared to that of other frameworks, like Angular. What I wanted to do was my own test of it against plain old vanilla JavaScript. (From this point on if I mention "Vanilla", that's what I mean: plain old unflavoured JavaScript that, in this case, is written by me.)

The docs claim that JavaScript is fast, and it's meddling with the DOM that's slow. So for that to be true we should be largely able to switch out React with something else and see pretty similar performance characteristics. Disclaimer: I have a bias that that's not true (though maybe I misunderstood the claim). It strikes me that even if JavaScript itself is fast, there are two trees that React is going to have to diff to make some patches, and therefore going direct will be faster, provided I don't trigger layout thrashing or some other shocker.

In any case the question is not "Is React faster than Vanilla?", so much as it is about how much extra time React is going to take to run, and thereby affect the user experience. In short: is the abstraction worth the cost?

For my test I decided to use something with a large number of elements, since that was also mentioned in the quote above. I figure that a modern app is likely to have some kind of infinite list, because feeds are pretty common. Whether you're Twitter, Hacker News, Spotify, Facebook, or, well, mostly anyone, an ever-growing list of items for your users to tap on seems de rigueur, and who am I if not a majority panderererer.

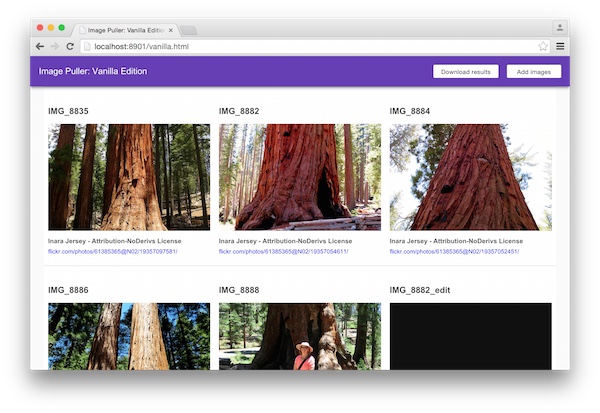

I ended up making a page that would pull images from the Flickr API, and essentially build an infinite list. Each time you click on the button in the top right corner I fetch some more photos, and I append those to the page. I have two variants: a React one and a Vanilla did-it-myself-in-JS-directly one, the general idea being to see what the performance difference is in terms of adjusting the page's DOM as the data set grows.

Ergonomics #

In React, updating the page is really nice; you alter its state object and it will figure out what changed and write to the DOM accordingly:

```javascript
var FlickrImages = React.createClass({

  loadImagesFromFlickr: function () {

    var onFlickr = function (results) {
      // Just update the state and React
      // does the rest. I like this mucho.
      this.setState({
        data: this.state.data.concat(results)
      });
    };

    flickr.search('tree', 100)
        .then(onFlickr.bind(this));
  },

  getInitialState: function () {
    return {data: []};
  },

  componentDidMount: function () {
    // Set up the component.
  },

  render: function() {
    // The DOM we need... the DOM we deserve?
  }
});
```

The vanilla version is lightweight enough, and it looks a little like this:

```javascript
flickr.search('tree', 100)
    .then(onFlickr);

function onFlickr (images) {

  var fragment = document.createDocumentFragment();

  images.forEach(function(image) {

    // Check if we can skip this element...
    var imageInDOM =
        document.getElementById('image-' + image.id);

    if (!imageInDOM) {

      // Create a bunch of DOM nodes...
      var newFlickrImage = document.createElement('div');
      // etc...

      // Then append them to the fragment.
      fragment.appendChild(newFlickrImage);
    }
  });

  // Append the fragment to the list.
  flickrList.appendChild(fragment);
}
```

So for sure the ergonomics of React are excellent. If you have a lot of subtle DOM changes, it's extremely helpful that it will catch them without you having to worry. In this case (and I wonder how many others) there's not much to capture really, so it's a hammer to crack a nut.

Results, please! #

I ran the tests on both my MacBook Pro and my Nexus 5, using Chrome stable (currently 43). Both devices are at the upper end of the capability range, so it's likely the trend, and not the actual values, that applies more broadly.

I used window.performance.now() either side of the JavaScript of note (ignoring the network requests, for example) to capture the amount of time spent processing the DOM changes, and I also set a requestAnimationFrame so I could measure to see how long it took for the next frame to land. It's only rough, but by marking the start of the next frame, we get a sense of how long it took for the page to render (styles, layout, paint, etc) once React or my Vanilla version finished.
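A rough sketch of that kind of measurement might look like the following. It is not the harness used for these tests (that code isn't shown here): measureUpdate, its work and done callbacks, and the env parameter are all hypothetical names, and env exists only so the timing logic can be exercised outside a browser.

```javascript
// Hypothetical helper: times a synchronous chunk of work, then waits for
// the next frame to start so the render cost can be estimated as well.
function measureUpdate(work, done, env) {
  env = env || {};
  // In a page the browser's own clock and frame scheduler are used.
  var now = env.now || function () {
    return window.performance.now();
  };
  var raf = env.raf || function (callback) {
    return window.requestAnimationFrame(callback);
  };

  var start = now();
  work(); // e.g. the setState() call, or building and appending the fragment
  var jsTime = now() - start;

  raf(function () {
    // The callback runs at the start of the next frame, so this is only
    // a rough marker for when styles, layout and paint have been handled.
    var totalTime = now() - start;
    done({
      jsTime: jsTime,
      totalTime: totalTime,
      renderTime: totalTime - jsTime
    });
  });
}
```

In a page you would pass the update as work and log or chart whatever arrives in done. The renderTime figure here is simply total time minus JavaScript time.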

Desktop: React #

Here I'm using React to add 100 Flickr photos at a time (~5 DOM elements per photo factoring in the name, link and so on), starting at 100 and heading up to 1,200.

There's a fairly flat graph here. I know from running this test a bunch that there is a slow trend upwards, but you have to get to some seriously large DOM sizes for it to matter. That said, I do have a pretty hefty MacBook Pro, so the CPU is probably churning through at a monster rate.

What did surprise me, though, was that the time taken to compare the DOM with 100 pictures (around 500 elements) was 60ms on my MacBook Pro. Even running the test a bunch of times, the best I could get was around 45ms. That's locking up the main thread, so if I have any work to do at all when React is comparing trees, I'm all out of luck.

Other thing of note: the JavaScript time is going up, but the time to actually manipulate the DOM (Total time minus JavaScript time) remains steady at ~10ms, which implies that we're not doing much to trouble Blink. That's as it should be, since React is supposed to keep my DOM changes to a minimum.

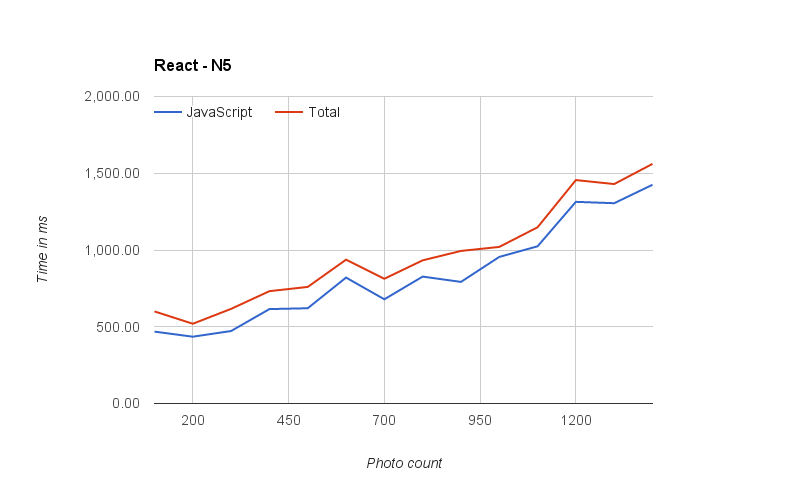

Mobile: React #

Now things get interesting. Let's repeat the React test, but this time on a mobile device: my Nexus 5.

This chart worries me a great deal.

The DOM manipulation time is again pretty steady (since the red line tracks the blue line pretty closely), but the base value is ~500ms for 100 pictures, and it goes up and up and up. By the time you're diffing a tree with 1,200 Flickr photos (a good amount of DOM at 6,000 elements) the main thread has locked up to the tune of around 1.5 seconds. The net result: the user ends up with a completely non-responsive UI. Sure, I got good ergonomics building the thing, but the cost to my users would be way too high.

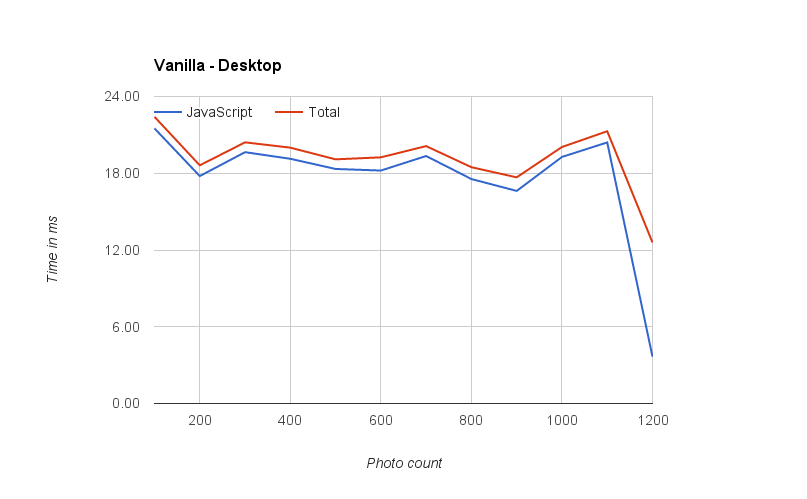

Desktop: Vanilla #

Now let's have a look at the Vanilla approach. Here I'm using my own JavaScript to create a fragment for the new images, the DOM for the new images, and to skip images that already exist.

The workload here remains pretty steady. Depending on how you look at it, it's somewhere between 1/2 and 1/3 of the cost of the same thing in React. It seems the majority of the JavaScript time is going into creating the elements and parsing any HTML generated. Since each batch of photos is the same size, the CPU load for each is roughly the same. There are no trees to compare, like we see in React.
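To picture why per-batch work can stay flat in one case and climb in the other, here is a deliberately naive toy model. It is not React's reconciliation algorithm, and toLookup, countVisits and range are made-up names. All it does is count how many list items get looked at when a batch of 100 is added to 1,100 existing ones.

```javascript
// Toy model only: this is NOT how React is implemented.

// Stands in for "what is already on the page", keyed by id.
function toLookup(ids) {
  var lookup = {};
  ids.forEach(function (id) { lookup[id] = true; });
  return lookup;
}

// Checks each id against the rendered set, counting the comparisons
// made and how many items would need new DOM.
function countVisits(rendered, idsToCheck) {
  var visits = 0;
  var created = 0;
  idsToCheck.forEach(function (id) {
    visits++;
    if (!rendered[id]) {
      created++;
    }
  });
  return { visits: visits, created: created };
}

// Small helper to build [start, start + 1, ...] with count entries.
function range(start, count) {
  var out = [];
  for (var i = 0; i < count; i++) {
    out.push(start + i);
  }
  return out;
}

var existing = range(0, 1100);   // photos already in the list
var batch = range(1100, 100);    // the next 100 photos
var rendered = toLookup(existing);

// Diff-style: the whole next list is compared against what's rendered.
var diffStyle = countVisits(rendered, existing.concat(batch));
// → { visits: 1200, created: 100 }

// Direct-style: only the incoming batch is checked.
var direct = countVisits(rendered, batch);
// → { visits: 100, created: 100 }
```

Both styles create the same 100 items. The difference in this toy is only in how much of the existing list gets revisited along the way.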

As above, the DOM manipulation time remains relatively steady throughout, at around 2ms. It's probably so light because my MacBook Pro has enough CPU to throw at the problem.

I have no idea what's happened at the end. Either V8 optimized the JS, or there was some kind of reporting error.

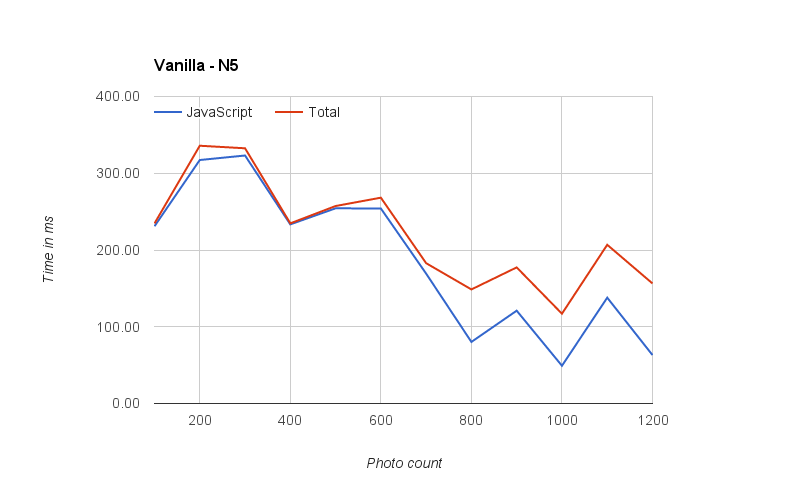

Mobile: Vanilla #

This chart is a bit of a bamboozler, to be honest. Since it's getting faster with each run I can only conclude that V8 is being a smartypants and optimizing things on the go, ruining a perfectly good test. It's interesting (and good!) that it seems able to do that with my plain old Vanilla JS, but it doesn't seem to get as far with React. That makes sense since my code is super simple, and React, very necessarily, is not.

However, we can still see that the workload is in the 80-335ms range, which is way higher than I would personally like for work of this kind. I reckon you could work around it somewhat by attaching fewer elements on each pass, and keeping the user updated on your progress. With the Vanilla approach that's perfectly plausible.
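One way that workaround could be sketched: split each result set into smaller chunks, append one chunk per frame, and report progress after each. This is an illustration under assumptions, not code from the test. appendInChunks, appendChunk, onProgress and schedule are hypothetical names, and schedule is injectable only so the logic can run outside a browser.

```javascript
// Hypothetical helper: appends items a chunk at a time, yielding to the
// browser between chunks so the main thread is never blocked for long.
function appendInChunks(items, chunkSize, appendChunk, onProgress, schedule) {
  // Default to one chunk per animation frame when running in a page.
  schedule = schedule || function (callback) {
    return window.requestAnimationFrame(callback);
  };
  // Guard against a zero or negative size, which would never finish.
  chunkSize = Math.max(1, Math.floor(chunkSize));

  var index = 0;

  function step() {
    var chunk = items.slice(index, index + chunkSize);
    appendChunk(chunk); // e.g. build a fragment for just these items
    index += chunk.length;

    if (onProgress) {
      onProgress(index, items.length); // e.g. update a "loading" label
    }
    if (index < items.length) {
      schedule(step);
    }
  }

  if (items.length > 0) {
    schedule(step);
  }
}
```

With the Vanilla code, appendChunk could be a function like onFlickr that receives 20 images at a time instead of 100. The right chunk size is something to find by profiling on the target device.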

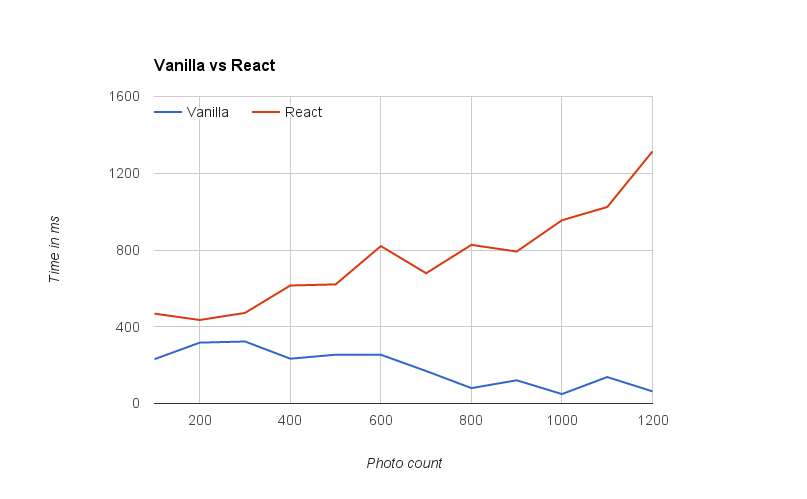

Bonus round: Vanilla vs React #

Finally, I want to put up the numbers (just the JavaScript ones) for React alongside the Vanilla code on the Nexus 5:

This, at least as far as this test goes, is pretty much the summary. For mobile there's a remarkable cost to using React over not doing so, and the cost is so high as to be reasonably prohibitive.

Conclusions #

Was this a fair test? I imagine some folks will feel that it isn't. I can see that: I've only tested how well React copes with increasing tree sizes. At the lower end of the test, however, there were only ~500 DOM elements in the component, which shouldn't be considered preposterously large. One of the claims of React is that it's the DOM that is slow, not JavaScript, so perhaps this test is fair after all. This kind of component is very common, and I doubt most development teams would say "we're going to use React for everything apart from these components here", but maybe I'm wrong.

Here are my final thoughts:

- React has lovely ergonomics. Writing JSX and React was a lot of fun, and it's always lovely when you don't have to think too deeply about the edge cases of your code. In that respect, React is brilliant: it's going to catch minor changes all over your tree that you could well have missed.

- React has significant costs, especially on mobile. React has a lot of computational work required to do all of its checks. On mobile the cost is far higher than I think is reasonable.

- Always, always, always test. For your app React may well be an epic win. I know there are many who love it, and I can see why. Just be sure to profile (if you don't know how, I have a free Udacity course you could take) to check the impact on your project! It could be that you have the pathologically bad case which is ill-suited to the tool you're using.

What's really at stake here, to my mind at least, are the performance benefits (read: user experience) and the developer ergonomics. React is very pleasant for developers to use, but at what cost to the user? It seems to me that developer ergonomics should be less important than our users' needs, as painful as that can be for us developers. Despite the claims, React does seem to have significant performance implications, at least under certain circumstances.

I really enjoyed using React, but I wouldn't personally use it on an app I'm building; I just don't think it would be fast enough.

Other bits and pieces #

Thanks to Pascal Hartig for reviewing my React code and pointing out where I was being an utter noob, to Jake Archibald for providing Flickr API code, and to Addy Osmani for proof-reading.

Some questions you may or may not have:

- How is it on [insert browser here]? I don't know. I do know that we should test it and find out, and I wouldn't pretend that my testing here covers every eventuality.

- Is the source code available? Not yet. Code I write at Google isn't automatically OSS, but if there's significant interest (let me know via Twitter) I will try and get something sorted.

- Did you use keys? React does better when it knows it can skip stuff. Yes, I thought it was ace for telling me that in the console as a warning. I added the keys so it could skip right past the photos it already had. There are probably other optimizations to be made, though. There always are.

- Did you set shouldComponentUpdate to false? Yeah that would definitely improve matters here, unless I need to update something like a "last updated" message on a per-photo basis. It seems to then become a case of planning your components for React, which is fair enough, but then I feel like that wouldn't be any different if you were planning for Vanilla DOM updates, either. It's the same thing: you always need to plan for your architecture.

- Does the React team even care? Yes, I think they do. From the recent meeting notes, they seem to care a lot about JS perf and React. I'm always hopeful for the future of anything when people care about performance and, by extension, user experience.
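
On the shouldComponentUpdate question above, here is a minimal sketch of what a per-photo check could look like. It assumes each photo component receives a photo prop with id and lastUpdated fields. Those fields and the flickrPhotoSpec name are hypothetical, since the test's component structure isn't shown. shouldComponentUpdate(nextProps, nextState) itself is React's lifecycle hook.

```javascript
// Hypothetical spec for a per-photo component. In a real app this object,
// together with a render() method, would be passed to React.createClass().
var flickrPhotoSpec = {

  // React calls this before re-rendering a mounted component. Returning
  // false skips the render, and the diff, for this component.
  shouldComponentUpdate: function (nextProps) {
    var current = this.props.photo;
    var next = nextProps.photo;

    // Only re-render when something this component displays has changed.
    return next.id !== current.id ||
        next.lastUpdated !== current.lastUpdated;
  }
};
```

Whether that is enough depends on what each photo needs to show. The more a component displays, the more fields the check has to cover, which is the planning cost mentioned in the answer above.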