I have a reputation, apparently

Turns out I'm known (at least in Developer Relations at Google, I am) for disliking anything that looks like a framework, smells like a framework, or sounds like a framework. I don't like Inversion of Control; I want code to play by my rules, not force me to play by its.

> In a framework, unlike in libraries or normal user applications, the overall program's flow of control is not dictated by the caller, but by the framework.


I'll be blunt about my feelings on Polymer 0.5, which I considered a framework: it gave me all the wrong feels. It tried to do so much, all in one go, and it felt like eating a five-course dinner when all you wanted was a salad. After all, there's no hard requirement to make your own elements. Semantic markup does just fine, and it's hard to beat the browser at components that its engineers have had years to refine.

There was a scent of "pop this script in the head, and that includes the polyfill as well, oh and while you're here just hide everything until all your components have bootstrapped, because FOUC." All of which made me uncomfortable. Lazy-loading, non-blocking, progressively enhancing pages are a core strength of the web. We need to make use of that, not get in the way of it.

It was, in many ways, unfair to judge Polymer so harshly at that stage, because it was labeled as 0.5, not 1.0. Nobody said it was production ready; it was still a work-in-progress!

Ohai Polymer 1.0!

At Google I/O this year we announced that Polymer is now at 1.0, and I knew from 0.8 and 0.9 that Polymer was far smaller. It was looking very promising. I have something of a policy of giving 1.0 releases a decent go (I did the same with Dart), so I decided it was high time to see if I could get a pattern that preserves the best of the web (progressively enhanced and non-blocking) with the future-facing goodies of Web Components and Polymer.


Here's what I reckon is worth knowing for Polymer 1.0:

- It doesn't include any polyfills. That's good, because I reckon we only want to get the polyfill if we absolutely need to, not by default. We will need it for any browser that doesn't support Web Components (so Chrome is the only exception here), but hopefully more browsers will support Web Components, and the need to polyfill will reduce.

- It comes in three tiers. There's micro, mini, and standard. Each builds on the tier beneath it. If you want anything interesting I reckon you're going to need standard, but if you just want a light wrapper around Web Components then it's micro for you! In my case I want some of the standard features, so that's what I grabbed.

- Elements aren't included. That's also good, because if I want to include Material Design elements (or any others for that matter) I would want to opt into that. I'm not going to cover the Paper, Platinum, Neon, or Iron elements in this post. That'd be like reviewing a UI kit, and at this point I'm more about using Polymer as a component author. That said, everything I'm saying in this post should still apply.

The first two are changes from 0.5 and the last isn't, but the changes are important, because they require far less investment from a developer's point of view, and they put you back in the driving seat. Less like a framework, more like a library. Less like being given that five-course dinner, and way more like an awesome componenty buffet. That could totally be a thing.

The performance problem

The samples and documentation often put the polyfills and HTML imports in the head of the document, both of which are render blocking. They also hide all the elements until they've fully loaded, at which point they get shown. (You can do that with conveniences like the :unresolved pseudo-class, which stops matching once an element is upgraded.)

The net result of both of these is that the user sees nothing until everything has loaded, but with a bit of judicious reworking, we can get things loading and rendering progressively.

Right, here goes...

Step 1: Inline styles and region your app

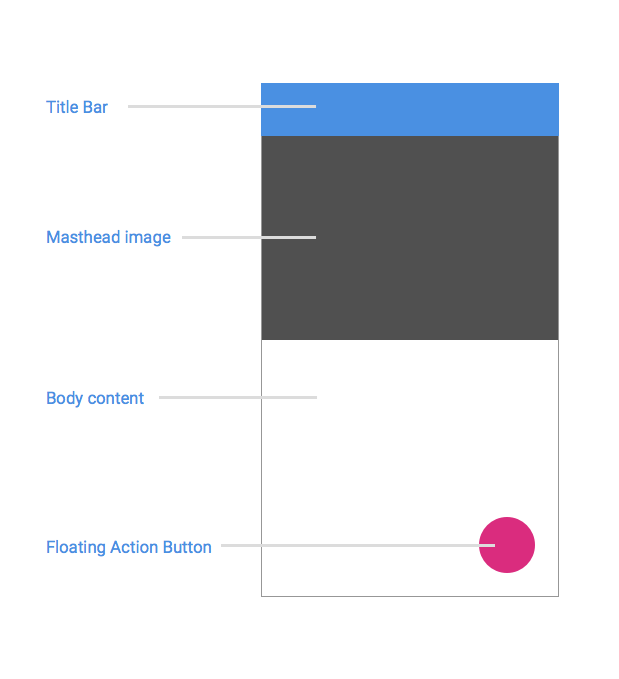

This one is useful, whether or not you use Polymer. Let's say this is the layout of your app:


You could wait for all the components to download, then you could assemble them in some kind of JavaScript-based bootstrapping process. Or maybe you'd do it as part of a container <my-app> tag's scoped styling.

My problem with this is that you probably know the layout of your app ahead of time, so why not just send down placeholder styles in your HTML? Why wait and block rendering? It can be as simple as the major regions of your app, and perhaps some coloring info, like toolbars or buttons.

```html
<!doctype html>
<html>
<head>
  <meta charset="utf-8">
  <meta name="viewport" content="width=device-width">
  <title>My app</title>
  <style>
    /* Inline minified placeholder styles */
  </style>
</head>
<body>
  <my-epic-element></my-epic-element>
  <script src="/scripts/app.js"></script>
</body>
</html>
```

Doing it this way means the user sees something super quickly as part of the initial response. The elements can then "undo" this styling when they get upgraded as part of the custom elements flow.


The only thing to be aware of is that if you use :unresolved for element styling, which feels like the correct approach, you can end up with something FOUC-like: when :unresolved stops matching, your element may or may not have painted. If it hasn't, the placeholder styles are removed, then the new styles are applied, and you'll see it flash from one to the other. In my case I found it better to handle this swap in Polymer's attached callback and use a class of my own. I may have missed a Polymer feature that makes this swap easier to handle.
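Here's a minimal sketch of that swap, assuming Polymer 1.0's `Polymer({ is: ..., attached: ... })` registration. The `epic-ready` class and the `markUpgraded` helper are hypothetical names, not something Polymer provides. The idea is that the inline placeholder rules are written as `my-epic-element:not(.epic-ready) { ... }`, so they only stop applying when the element adds that class itself, rather than whenever `:unresolved` stops matching.

```javascript
// Hypothetical class name. The inline placeholder CSS would target
// `my-epic-element:not(.epic-ready)`, so adding this class drops the placeholder.
var READY_CLASS = 'epic-ready';

// Adds the "ready" class to an element; classList.add ignores duplicates.
function markUpgraded(element) {
  element.classList.add(READY_CLASS);
}

// Only register when Polymer 1.0 has loaded (it won't have, e.g., under Node).
if (typeof Polymer === 'function') {
  Polymer({
    is: 'my-epic-element',
    // Polymer 1.0 calls attached() once the element has been inserted
    // into the document; that's where we hand over from the placeholder.
    attached: function () {
      markUpgraded(this);
    }
  });
}
```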

You could probably argue that, with self-contained components, it's a fudge to send any styling ahead of time. I'd say that it's worth it for the gain in perceived performance, and that pragmatism should win. But then I would say that.

Step 2: Conditionally (and async) load the polyfill

With placeholder styles in place, you now need to either go and get your elements, or get the Web Components polyfill and then get your elements.

Glen Maddern made a good little check for that, but it used document.write, an API I dislike with great intensity. But this is the modern web, and so we're good for async script loading:

```javascript
/**
 * Conditionally loads webcomponents polyfill if needed.
 * Credit: Glen Maddern (geelen on GitHub)
 */
var webComponentsSupported = ('registerElement' in document
    && 'import' in document.createElement('link')
    && 'content' in document.createElement('template'));

if (!webComponentsSupported) {
  var wcPoly = document.createElement('script');
  wcPoly.src = '/third_party/webcomponents-lite.min.js';
  wcPoly.onload = lazyLoadPolymerAndElements;
  document.head.appendChild(wcPoly);
} else {
  lazyLoadPolymerAndElements();
}

// Function declarations are hoisted, so it's fine to call this above.
function lazyLoadPolymerAndElements () {
  // Set up the element imports.
}
```

Notably, I'm only loading the lite version of the polyfill, because Polymer 1.0 includes shady DOM (and will let you prefer shadow DOM if it's available in the browser your users are using). The only reason to use the full-fat version of the polyfill is if you want to use shadow DOM everywhere or you're not using Polymer. Well, I am using Polymer, so that's all good. That also means I only need to check for support for custom elements, imports, and templates.
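If you'd rather hear about a failed polyfill download than have `lazyLoadPolymerAndElements` silently never run, one option is to wrap the injection in a Promise. This is a sketch of a variation on the check above, not Glen's original: `loadScript`, `supportsWebComponents` and `ensureWebComponents` are hypothetical helpers, the document is passed in as a parameter so the logic can be exercised outside a browser, and it assumes native `Promise` support (or a Promise polyfill loaded first), which not every browser that needs the Web Components polyfill will have.

```javascript
// Hypothetical helper: inject a <script> and settle on its load/error events.
function loadScript(doc, src) {
  return new Promise(function (resolve, reject) {
    var script = doc.createElement('script');
    script.src = src;
    script.onload = function () { resolve(src); };
    script.onerror = function () { reject(new Error('Failed to load ' + src)); };
    doc.head.appendChild(script);
  });
}

// Same feature test as above, parameterised on the document.
function supportsWebComponents(doc) {
  return 'registerElement' in doc &&
      'import' in doc.createElement('link') &&
      'content' in doc.createElement('template');
}

// Resolves once Web Components are usable: immediately with 'native' if the
// browser supports them, otherwise with the polyfill URL once it has loaded.
function ensureWebComponents(doc, polyfillSrc) {
  if (supportsWebComponents(doc)) {
    return Promise.resolve('native');
  }
  return loadScript(doc, polyfillSrc);
}

// In a page, something like:
// ensureWebComponents(document, '/third_party/webcomponents-lite.min.js')
//   .then(lazyLoadPolymerAndElements)
//   .catch(function (err) { console.error(err); });
```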

Step 3: Only bundle related elements

The pattern I've seen a few times is to have a single elements.html file, which goes off and imports all the elements for your app. Pretty gravy once it's been vulcanized and crushed down to a single file. (If you're unfamiliar with the term, vulcanizing is the concatenation process that flattens your imports.) But what if you have one component that's, like, 2KB, and another that's 300KB? Well, I reckon you want them to load independently, so the small one can get going sooner.

Since we already reserved space for the elements in step 1, it shouldn't matter too much in what order they arrive. If it does matter to your app, then I'd argue they should be bundled together in the vulcanize process.

In the code above there was a lazyLoadPolymerAndElements function, which I use to create all the HTML imports on the fly. Since these aren't created by the browser's parser, the implication (from reading the HTML Imports spec) is that they should be non-blocking. Sounding gooooood.

```javascript
function lazyLoadPolymerAndElements() {
  // Let's use Shadow DOM if we have it, because awesome.
  // Polymer 1.0 reads these settings from window.Polymer when it loads,
  // so they need to be in place before polymer.html arrives.
  window.Polymer = window.Polymer || {};
  window.Polymer.dom = 'shadow';

  var elements = [
    '/path/to/bundle/one.html',
    '/path/to/bundle/two.html'
  ];

  // Script-created imports aren't parser-inserted, so they shouldn't block rendering.
  elements.forEach(function(elementURL) {
    var elImport = document.createElement('link');
    elImport.rel = 'import';
    elImport.href = elementURL;
    document.head.appendChild(elImport);
  });
}
```

So now we have these bundles of elements (I call them bundles, I dunno what else to call them...) loading in lazily. If you need to do imports inside another component, you can use the importHref function that comes as part of Polymer.Base. In fact, those utility functions are super handy. I guess that's why they called them utility functions, and not just... you know... functions.
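As a rough sketch of `importHref` in use, here's an element that pulls in one more bundle once it's attached. It assumes Polymer 1.0's `this.importHref(href, onload, onerror)` form; the `lazy-panel` element, its `extraBundle` and `bundleLoaded` properties, and the bundle path are all made up for illustration.

```javascript
// Hypothetical element config, kept in a variable so it can be inspected.
var lazyPanelConfig = {
  is: 'lazy-panel',
  properties: {
    // Hypothetical path to a further vulcanized bundle.
    extraBundle: { type: String, value: '/path/to/bundle/three.html' },
    // Flipped to true once the extra bundle's import has loaded.
    bundleLoaded: { type: Boolean, value: false }
  },
  attached: function () {
    // Capture the element so the callbacks don't depend on how `this` is bound.
    var self = this;
    // importHref adds an HTML import for the URL and calls back on load or error.
    this.importHref(this.extraBundle, function () {
      self.bundleLoaded = true;
    }, function () {
      console.warn('Could not load ' + self.extraBundle);
    });
  }
};

// Only register when Polymer 1.0 has loaded.
if (typeof Polymer === 'function') {
  Polymer(lazyPanelConfig);
}
```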

To make sure the bundles are as small as possible I use minifyInline during the build process to uglify the JS and minify the CSS of each, right after vulcanize has done its thing.
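If you want to check that the bundles really do arrive independently, one option is to give each import its own `load` listener. This is a hypothetical variant of `lazyLoadPolymerAndElements`, not the code above: `importBundle` and `importBundles` are illustrative names, it leaves out the `window.Polymer` settings step, the document is passed in so it can be run outside a browser, and it assumes native `Promise` support.

```javascript
// Hypothetical helper: one <link rel="import"> per bundle, wrapped in a Promise.
function importBundle(doc, url, onArrive) {
  return new Promise(function (resolve, reject) {
    var link = doc.createElement('link');
    link.rel = 'import';
    link.href = url;
    // Report each bundle as soon as its own import has loaded.
    link.onload = function () { onArrive(url); resolve(url); };
    link.onerror = function () { reject(new Error('Import failed: ' + url)); };
    doc.head.appendChild(link);
  });
}

// All links are appended up front, so the bundles download in parallel.
// The returned Promise resolves with the URLs in the order they were listed.
function importBundles(doc, urls, onArrive) {
  return Promise.all(urls.map(function (url) {
    return importBundle(doc, url, onArrive);
  }));
}

// In a page, something like:
// importBundles(document,
//     ['/path/to/bundle/one.html', '/path/to/bundle/two.html'],
//     function (url) { console.log('Arrived:', url); });
```

The `onArrive` callback fires in whatever order the network delivers, so a small bundle can report (and its elements start up) before a large one.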

Step 4: Don't inline Polymer into your bundles

Now that we have bundles, each one can import Polymer, but we want to exclude Polymer itself from each bundle's vulcanize process, so that they don't inline their own copies of it, and so they can all race to get it. When Polymer arrives from its separate import, any of the bundles that have finished downloading can execute, and the element(s) they're responsible for can fire up.

To exclude Polymer from the bundles' vulcanize process:

```javascript
var gulp = require('gulp');
var path = require('path');
var vulcanize = require('gulp-vulcanize');
var minifyInline = require('gulp-minify-inline');

gulp.task('vulcanize-and-minify', function() {
  return gulp.src('./dist/elements/**/*.html')
    // Vulcanize, inline all the things
    // except for Polymer, which we leave alone.
    .pipe(vulcanize({
      inlineScripts: true,
      inlineCss: true,
      // Keep the (excluded) import of Polymer in each bundle.
      stripExcludes: false,
      excludes: [path.resolve('./dist/third_party/polymer.html')]
    }))
    // Crush all that JS and CSS and pipe out.
    .pipe(minifyInline())
    .pipe(gulp.dest('./dist/elements'));
});
```

Meanwhile, just for funsies, I also took polymer.html, vulcanized it separately from the bundles, and ran that through minifyInline as well. In the end I got it down to 27KB for Polymer and 12KB for the polyfills (both gzipped, but this is 2015 so I dunno why I mention that).

Page Load Performance

To summarise the journey so far:

- Use inline styles to reserve app "regions".

- Vulcanize and crush the element bundles (excluding Polymer itself).

- Conditionally and async load the Web Components lite polyfill.

- Async load the element bundles. (This will also set up a race for Polymer itself).

All that leaves us is to check whether it was actually worthwhile. You'd kind of have to hope so, or I'm just going to feel bad about me. Again.

I'm working on a web app which makes use of this approach. It's a small utility app, but it has three custom components. (Hopefully I'll get it finished up and launched soon so you can see all this code in one place!)

With my web app in tow, I ran two tests:

- Unoptimized. I put the polyfill and HTML imports in the head (which is render blocking), and kept the styling in a separate CSS file.

- Optimized. I did everything I just outlined.

I ran everything on WebPageTest with a Motorola G running Chrome on a 3G connection. The app was served over HTTPS, so the TLS handshaking adds a good chunk of time that skews the timings. Even so, the results were pretty interesting:

- Speed Index: Unoptimized: 4,848, Optimized: 2,895. Saving: ~40%.

- Start Render: Unoptimized: 4.477s, Optimized: 2.366s. Saving: ~47%.

- Load Time: Unoptimized: 4.065s, Optimized: 3.836s. Saving: ~6%. This is about the same for both tests: we're loading essentially the same stuff, just with a different "weighting", so that's kind of expected.
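Each saving is the relative reduction, (unoptimized - optimized) / unoptimized. If you want to reproduce the rounding from the figures above, a few lines will do it; `percentSaving` is just a hypothetical helper name.

```javascript
// Relative saving of the optimized run versus the unoptimized one, as a percentage.
function percentSaving(unoptimized, optimized) {
  return ((unoptimized - optimized) / unoptimized) * 100;
}

// Figures from the WebPageTest runs above (Speed Index is unitless; times in seconds).
var results = {
  speedIndex: percentSaving(4848, 2895),
  startRender: percentSaving(4.477, 2.366),
  loadTime: percentSaving(4.065, 3.836)
};

Object.keys(results).forEach(function (metric) {
  console.log(metric + ': ' + results[metric].toFixed(1) + '%');
});
// speedIndex: 40.3%
// startRender: 47.2%
// loadTime: 5.6%
```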

You should take these numbers with a pinch of salt, because this is just one round of tests on one app, but for what it's worth Jakey Vegas seems to be seeing the same kinds of benefits by taking a similar approach (in non-Polymer land).

If I'd needed to, I could also have included some placeholder JavaScript to handle early interactions before the app is ready. Again, you can just have the element undo it on upgrade. (If it's someone else's component, then I guess you'd have to do something smarterer here, but it's still a good idea.)
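Here's one possible shape for that placeholder JavaScript, as a hedged sketch rather than anything Polymer provides: a tiny queue that buffers interactions while the real component is loading and replays them, in order, once it's ready. `createEarlyInteractionQueue`, `record`, `drain` and `size` are hypothetical names.

```javascript
// Hypothetical helper: buffer interactions until the real handler exists.
function createEarlyInteractionQueue() {
  var pending = [];
  var handler = null;

  return {
    // Called from a lightweight placeholder listener. Once a real handler
    // has been supplied, interactions are forwarded straight to it.
    record: function (interaction) {
      if (handler) {
        handler(interaction);
      } else {
        pending.push(interaction);
      }
    },
    // Called by the upgraded element: replays buffered interactions in order,
    // routes future ones directly, and returns how many were replayed.
    drain: function (realHandler) {
      handler = realHandler;
      var queued = pending;
      pending = [];
      queued.forEach(function (interaction) { handler(interaction); });
      return queued.length;
    },
    // Number of interactions still waiting to be replayed.
    size: function () {
      return pending.length;
    }
  };
}
```

In a page, a small inline listener on the placeholder would call `record`, and the upgraded element would call `drain` from its `attached` callback before removing that listener.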

Rendering Performance

For rendering performance, again this is just one data point, and I'll keep it brief, but I'm barely seeing Polymer show up in my timeline recordings.

The big caveat here is that I'm not using some of the supposedly "costlier" features like data binding, so you should check and stress test your own apps. But you don't have to use any Polymer feature you don't want to, and at 27KB it's not as though having it available to you is significant bloat. That said, if I had one wish today it would be a custom Polymer builder (or tree shaker), a bit like the MooTools and Modernizr builders. But it's no deal breaker as things are.

Paul ♥'z Polymer


The reason I really like Web Components is that they help me embrace good practices. Sure I can do stuff with sections, divs, paragraphs, whatever, and I can fire my own events and achieve the same visual ends, but Web Components seem to bring out better code from me. (I feel the same about a bunch of ES6 features, as it happens.) It's not like it's waving a magic wand (I can still do bad things, obv), and yes it's more work to make a component than not, but anything worth doing is worth doing properly aye-emm-ohh.

The reason I really like Polymer 1.0 is that it gets out of my way, but it does give me a lot of ergonomic benefits. That's the kind of working relationship I like having with my code. Writing components is fine, but with Polymer it's a breeze. I also seem to be able to do it performantly, thanks to the rewrite between versions 0.5 and 1.0.

So I guess you can say Polymer and I are now, finally, firm friends.