When paint meets image

When Chrome has been through the process of style calculations and layout, it must then rasterize the pixels for your page. In Chrome the rasterizer that does all the work is called Skia, and it takes a list of draw calls. Those draw calls are created by looking at the styles of the visible elements in your page, some of which will be images (or will use images as backgrounds).

Whenever Skia hits one of the draw calls that deals with an image, it has to go off and get the encoded image (which most likely came down the wire as a JPEG, PNG, WebP or GIF) and decode it to a bitmap. It’s also probably going to have to resize it. This happens inline with all the other draw calls that need to take place, so if the decoding or resizing takes a long time you will see periods of unresponsiveness or checkerboarding in your sites and applications, which is undesirable to say the least.

So I had the idea of seeing if I could beat the browser at its own game. The question I was asking myself was: is there any way that I, as the developer, can be in control of image decoding and resizing? And what are the trade-offs if I go offroad and try to do something that the browser already does for me automatically?

But let’s take a proper look at the problem we’re facing.

Decoding and resizing

Whenever we scroll or interact with the page, a whole series of complex actions takes place under the hood, and the thing we care about most is that Chrome may end up deciding that it has to paint a new area of the page.

When this happens and painting involves an image, Chrome retrieves the encoded contents of the image file and decodes it from its original JPEG, GIF, PNG or WebP format to a bitmap in memory. Interestingly, for most algorithms you can decode at a non-natural size, i.e. bigger or smaller than the size at which the image was saved. But resizing is often necessary, especially when an image is used in a responsive design and sized as a percentage of its container element, or when the user pinch-zooms.

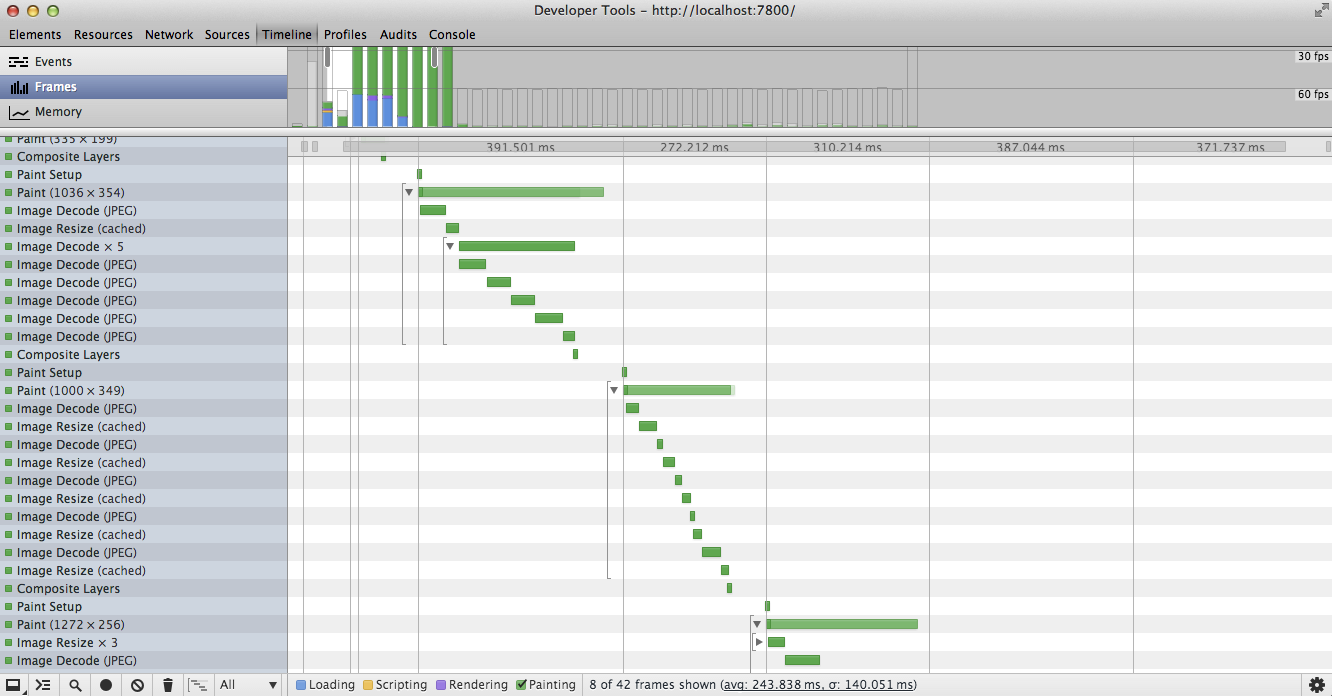

Decoding and resizing are both expensive operations. If rasterization requires several images to be decoded or resized you may well see huge spikes in the time it takes for a frame to complete.
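To put a number on those spikes: at 60fps each frame has a budget of roughly 16.7 ms, so any work on the rasterization path that runs longer pushes past one or more frame deadlines. For a hypothetical 50 ms decode (an illustrative figure, not a measurement):

$$
t_{\text{frame}} = \frac{1000\,\text{ms}}{60} \approx 16.7\,\text{ms},
\qquad
\frac{t_{\text{decode}}}{t_{\text{frame}}} = \frac{50\,\text{ms} \times 60}{1000\,\text{ms}} = 3
$$

That is three frame intervals’ worth of rasterization time spent on a single image.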

Chrome tries to reduce the work by caching the decoded and resized versions of images. The specifics of what gets cached (the decoded image, resized forms of the image) and how big the cache is get complicated, because they vary with the internal architecture Chrome is using; let’s call the two options single-threaded and multi-threaded painting.

Whether or not you see jank or checkerboarding while a large image is being decoded depends on which architecture Chrome is running (and that’s not something you choose as a developer), so let’s take a brief look at each one and how it affects things.

Single-threaded painting

In this mode painting is handled by the main thread of the page, and is the approach primarily used on today’s desktop version of Chrome.

Since rasterization, and by extension image decoding and resizing, takes place on the main thread, all other activity on the main thread is blocked while it happens. To your users this feels like the page judders a lot or, worse, locks up for long periods of time.

If you’re making something image-heavy like a gallery, or just something with a lot of images appearing on screen at once, you may well feel the problem more acutely.

Multi-threaded painting

In this mode painting is handled in a different thread entirely, which means that the main thread is free to carry on running JavaScript, handling layout and style calculations. This is the mode used on Android. The upside of this approach is that scrolling, JavaScript and the like can all happen while painting takes place.

The downside is that the rasterization calls are still executed as a sequential list, even though they are handled by a separate thread, and if we have to pause to decode and resize an image, no other painting (for non-image elements, say) is going to take place. Image handling is still one rasterization job in the list, and if an image takes a long time to decode or resize, you will end up seeing checkerboards until Chrome reaches the end of the list and rasterization is complete.

So that one weird trick you mentioned...

Have you ever browsed the web with images switched off? Sure, everything looks worse, but you will quickly see how much of an effect images have on the runtime performance of your pages.

What if, instead of letting the browser handle decoding, we did it ourselves, in JavaScript and outside of the normal rasterization flow? Perhaps if we do that we can isolate the image decodes and resizes, leaving Chrome to rasterize the rest of the elements and not be blocked on handling images. That should mean that we can interact with the page and scroll, and hopefully maintain a silky smooth 60fps at all times.

On the downside we’re about to start handling images ourselves, which is something that Chrome normally does for us automatically, and that should be a huge red flag to us. On the upside, if Chrome isn’t blocked on image handling, we may well be able to avoid that unsightly checkerboarding and jank.

Going with JavaScript for image decoding, our approach should look like this:

- Create a pool of Web Workers.

- Take the images that we’re interested in having painted and put placeholders into the DOM.

- Pass the URL of the images to a worker from the pool for decoding.

- Have the worker download the image as a binary file using an XHR and then decode it using a JavaScript-based decoder.

- Pass the decoded bitmap image data back to the main thread. (With transferables this is nice and fast.)

- Use the bitmap image data to fill in a `<canvas>` element at the correct dimensions.

- Replace the placeholders with the `<canvas>` elements.
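The steps above can be sketched as a small worker pool on the main thread. This is a minimal sketch under stated assumptions, not the demo’s actual code: the class `DecodeWorkerPool`, the helper `replacePlaceholder`, the request shape `{ id, url, width, height }` and the reply shape `{ id, width, height, pixels }` (or `{ id, error }`) are all hypothetical. It assumes the worker posts `pixels` back as an `ArrayBuffer` of RGBA bytes in the transfer list, so the buffer is moved rather than copied. The download and decode inside the worker are left to whichever JavaScript decoder you load there.

```javascript
// Minimal main-thread worker pool for off-main-thread image decoding.
// Assumed (hypothetical) protocol: each worker receives { id, url, width, height },
// downloads and decodes the image, then replies with
// { id, width, height, pixels } (or { id, error }), transferring the buffer:
//   self.postMessage(result, [result.pixels]);
class DecodeWorkerPool {
  constructor(createWorker, size) {
    this.idle = [];           // workers not currently decoding anything
    this.queue = [];          // jobs waiting for a free worker
    this.pending = new Map(); // job id -> job currently inside a worker
    this.nextId = 0;
    for (let i = 0; i < size; i++) {
      const worker = createWorker();
      worker.onmessage = (event) => this._onResult(worker, event.data);
      this.idle.push(worker);
    }
  }

  // Queue a decode; resolves with { id, width, height, pixels }.
  decode(url, width, height) {
    return new Promise((resolve, reject) => {
      this.queue.push({ id: this.nextId++, url, width, height, resolve, reject });
      this._dispatch();
    });
  }

  // Hand queued jobs to idle workers, oldest job first.
  _dispatch() {
    while (this.idle.length > 0 && this.queue.length > 0) {
      const worker = this.idle.shift();
      const job = this.queue.shift();
      this.pending.set(job.id, job);
      worker.postMessage({ id: job.id, url: job.url, width: job.width, height: job.height });
    }
  }

  // A worker finished: settle its job, mark it idle, and feed it the next job.
  _onResult(worker, data) {
    const job = this.pending.get(data.id);
    this.pending.delete(data.id);
    this.idle.push(worker);
    if (job) {
      if (data.error) job.reject(new Error(data.error));
      else job.resolve(data);
    }
    this._dispatch();
  }
}

// Browser-only helper: paint decoded RGBA pixels into a <canvas> of the
// decoded size and swap it in for the placeholder element.
function replacePlaceholder(placeholder, { width, height, pixels }) {
  const canvas = document.createElement('canvas');
  canvas.width = width;
  canvas.height = height;
  const imageData = new ImageData(new Uint8ClampedArray(pixels), width, height);
  canvas.getContext('2d').putImageData(imageData, 0, 0);
  placeholder.replaceWith(canvas);
}
```

In a page this might be wired up as `const pool = new DecodeWorkerPool(() => new Worker('decoder-worker.js'), 2);` (the script name is hypothetical) followed by `pool.decode(url, width, height).then((result) => replacePlaceholder(placeholderElement, result));`. A production version would also need to listen for `error` events on each worker, since a worker that crashes never replies and its job would wait forever.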

The thing to notice is that when the decoding happens in workers, the page stays smooth while you’re scrolling or interacting, even when the images are very large. In fact, depending on the number of workers you have, you can often maintain 60fps!

You may well be wondering how many workers you should use, but it really depends on the platform you’re running on and how much CPU time is available. This is one risk amongst many of doing something like this: a lot of the heuristics Chrome uses to decide how many resources to devote to this work are not surfaced to you through JavaScript, so at best you’re going to be guessing.
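One coarse signal browsers do expose is `navigator.hardwareConcurrency`, the number of logical processors. It says nothing about how busy those cores are, so it is still a guess. The helper below is a hypothetical heuristic, not a recommendation: it leaves one core for the main thread and caps the pool, and the default cap of 4 is an arbitrary assumption.

```javascript
// Hypothetical heuristic: one decode worker per logical core, minus one core
// left for the main thread, clamped to the range [1, maxWorkers].
function chooseWorkerCount(hardwareConcurrency, maxWorkers = 4) {
  // navigator.hardwareConcurrency may be missing in some environments,
  // so fall back to assuming a dual-core device.
  const cores = Number.isInteger(hardwareConcurrency) && hardwareConcurrency > 0
    ? hardwareConcurrency
    : 2;
  return Math.max(1, Math.min(maxWorkers, cores - 1));
}
```

In a page you would call `chooseWorkerCount(navigator.hardwareConcurrency)` and pass the result as the pool size, then adjust the cap after measuring on the devices you care about.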

Finally, I’m making use of the excellent jpgjs library for JPEGs, but you would need the right decoder for whichever image formats you’re working with.

The benefits and costs

With this approach we get a few immediate benefits:

- We get to decide how many workers are used for decoding.

- We get to decide if and when the images are decoded and painted.

- We know when an image has decoded and are able to fade it in smoothly.

On the downside we now have a few headaches:

- This works today, but if image handling changes and improves significantly, you’re locked into your homegrown solution, which may well be suboptimal.

- We have to manually decide the number of workers in our pool. That was, of course, a benefit, but as I mentioned earlier, the lack of heuristics means that you have control, yes, but you are operating without insight.

- We are now keeping the image data in the JavaScript heap, so we have to decide if and when we might want to purge that information. The last thing you want is to keep adding image data without ever releasing it!

- We need to have a process for deciding when an image should be decoded.
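To put the heap cost in numbers: a decoded RGBA bitmap takes 4 bytes per pixel, so a single 1000×1000 image is 4,000,000 bytes (about 4 MB) however small the compressed file was. One way to purge that data is a least-recently-used cache with a byte budget. The sketch below is hypothetical (`DecodedImageCache` is not from the demo) and assumes each entry keeps its pixels in an `ArrayBuffer`.

```javascript
// Sketch of a byte-budgeted LRU cache for decoded bitmaps held in the JS heap.
// A Map iterates in insertion order, so re-inserting an entry on access keeps
// the least recently used entry at the front.
class DecodedImageCache {
  constructor(maxBytes) {
    this.maxBytes = maxBytes;
    this.bytes = 0;
    this.entries = new Map(); // url -> { width, height, pixels: ArrayBuffer }
  }

  get(url) {
    const entry = this.entries.get(url);
    if (entry === undefined) return undefined;
    this.entries.delete(url); // move to the most-recently-used position
    this.entries.set(url, entry);
    return entry;
  }

  set(url, entry) {
    if (this.entries.has(url)) {
      this.bytes -= this.entries.get(url).pixels.byteLength;
      this.entries.delete(url);
    }
    this.entries.set(url, entry);
    this.bytes += entry.pixels.byteLength;
    // Evict least recently used entries until we are back under budget.
    // (An entry larger than the whole budget ends up evicted as well.)
    for (const [oldUrl, oldEntry] of this.entries) {
      if (this.bytes <= this.maxBytes) break;
      this.entries.delete(oldUrl);
      this.bytes -= oldEntry.pixels.byteLength;
    }
  }
}
```

Dropping an entry only removes the cache’s reference; the garbage collector reclaims the buffer once nothing else (such as a pending paint) holds it. The budget itself, for example `new DecodedImageCache(64 * 1024 * 1024)`, is another device-dependent guess.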

Right, but isn’t using JavaScript to decode images slower?

Yes, no doubt it is. But it’s not clear by how much, as that will depend on a number of factors such as the platform in question and what else is being done in the browser at the same time.

In any case, the real trade-off we’re making is to have more control over when and how images are handled, rather than relying on Chrome to do it for us. That, of course, comes with costs and risks, and certainly anything executed in JavaScript (even with V8’s function recompilation) is going to be slower than hand-tuned C++!

With that said, we’re seeing exciting improvements through asm.js and PNaCl, which make it possible to ship exceptionally fast code, so who knows, maybe one day it’ll be less of an issue.

What about parser lookahead?

Typically, when the browser receives HTML it does a lookahead and starts requesting images and other assets it sees it’s going to need. Any approach like this one that relies entirely on JavaScript is going to sacrifice that lookahead, because we’re adding in elements after the fact. Again, that doesn’t mean it’s to be avoided, but it’s important to know the trade-offs you’re making.

This method of injecting images with JavaScript is likely to be more suitable for anyone who is already constructing portions of the DOM dynamically, and it’s far less suitable when you’re simply serving static content on the first request to the server.

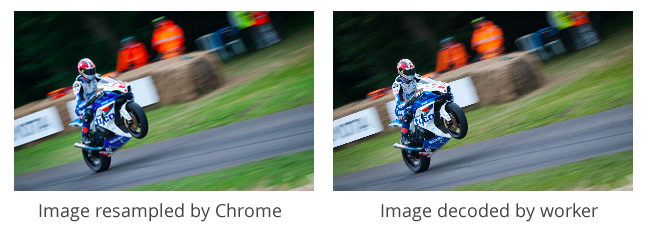

Hey, the images look a bit jaggy when the worker decodes them!

Yes, they do in the demo. This is because the decoding and resizing are less sophisticated than Chrome’s. Chrome uses Lanczos resampling to make sure that images displayed at non-natural sizes, i.e. bigger or smaller than the original, look as awesome as possible. In my demo I’m simply decoding to the final display size, which doesn’t look as good.

You can see the jagged edges in the side-by-side comparison image, particularly around the white advert on the side of the track. View the image at full size to see all the decoding warts!
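Decoding straight to the final display size first requires knowing that size in device pixels, which for a responsive image depends on the element’s CSS width and the screen’s `devicePixelRatio`. The helper below is a hypothetical sketch that assumes the CSS width is known and that the height should follow the image’s natural aspect ratio.

```javascript
// Hypothetical helper: compute the bitmap size (in device pixels) that matches
// an element's on-screen width, preserving the image's natural aspect ratio.
function targetDecodeSize(naturalWidth, naturalHeight, cssWidth, devicePixelRatio = 1) {
  const width = Math.max(1, Math.round(cssWidth * devicePixelRatio));
  const height = Math.max(1, Math.round((width * naturalHeight) / naturalWidth));
  return { width, height };
}
```

For example, a 4000×3000 photo shown 400 CSS pixels wide on a 2× screen would be decoded to 800×600 rather than at its full natural size.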

There are things you could try: implement resampling in the worker, or decode at a larger size and then scale the canvas down using CSS. Both carry an additional computational cost, so be sure there’s a significant improvement before committing to either.
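As a sketch of the first option, a box filter (area averaging) is about the simplest resampler you could run in the worker: decode at, say, twice the target size, then average each 2×2 block down to one pixel. To be clear, this is a different and much cheaper filter than the Lanczos resampling Chrome uses, and `downscaleBox` is a hypothetical function. It also assumes opaque images such as JPEGs, so it averages the alpha channel without premultiplying.

```javascript
// Box-filter (area-average) downscale of RGBA pixel data by an integer factor.
// Every source pixel contributes to the result, which avoids much of the
// jaggedness of simply picking one source pixel per output pixel.
// Assumes opaque images, so alpha is averaged like any other channel.
function downscaleBox(src, srcWidth, srcHeight, factor) {
  const dstWidth = Math.floor(srcWidth / factor);
  const dstHeight = Math.floor(srcHeight / factor);
  const dst = new Uint8ClampedArray(dstWidth * dstHeight * 4);
  const area = factor * factor;
  for (let y = 0; y < dstHeight; y++) {
    for (let x = 0; x < dstWidth; x++) {
      const sums = [0, 0, 0, 0];
      // Sum the factor x factor block of source pixels under this output pixel.
      for (let dy = 0; dy < factor; dy++) {
        for (let dx = 0; dx < factor; dx++) {
          const i = ((y * factor + dy) * srcWidth + (x * factor + dx)) * 4;
          for (let c = 0; c < 4; c++) sums[c] += src[i + c];
        }
      }
      const o = (y * dstWidth + x) * 4;
      for (let c = 0; c < 4; c++) dst[o + c] = Math.round(sums[c] / area);
    }
  }
  return { width: dstWidth, height: dstHeight, pixels: dst };
}
```

The cost is one extra pass over four times as many decoded pixels (for a factor of 2), which is exactly the kind of additional work worth measuring before adopting it.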

Performance tuning

As I mentioned before, you need to manually control the number of workers you have on the go at once. Every worker requires CPU time, and on constrained devices like phones or tablets that is a precious resource you don’t want to exhaust.

Beyond the number of workers, you also want a tactic for when you even request the image decode work to take place. In Chrome, decoding and resizing happen lazily, as a dependency of painting, but since we’ve just gone offroad we have to handle things ourselves. What you choose to do is down to the application you’re building, but just as you don’t want to keep image data around forever, you also want to be sure the workers only process images when absolutely necessary.
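One possible tactic is to decode only the placeholders that are in, or about to enter, the viewport, nearest first. The sketch below works on plain rectangles shaped like the result of `getBoundingClientRect()` (with `top` and `bottom` relative to the viewport); the function names and the 500px look-ahead margin are hypothetical choices to tune for your application.

```javascript
// Is this placeholder inside the viewport, or within `margin` CSS pixels of it?
// `rect` has the same top/bottom fields as getBoundingClientRect().
function shouldDecode(rect, viewportHeight, margin = 500) {
  return rect.bottom >= -margin && rect.top <= viewportHeight + margin;
}

// Vertical distance from the viewport; 0 if the rect is at least partly visible.
function distanceToViewport(rect, viewportHeight) {
  if (rect.bottom < 0) return -rect.bottom;                        // above
  if (rect.top > viewportHeight) return rect.top - viewportHeight; // below
  return 0;
}

// Indices of the placeholders worth decoding now, nearest to the viewport first.
function placeholdersToDecode(rects, viewportHeight, margin = 500) {
  return rects
    .map((rect, index) => ({ rect, index }))
    .filter(({ rect }) => shouldDecode(rect, viewportHeight, margin))
    .sort((a, b) => distanceToViewport(a.rect, viewportHeight) -
                    distanceToViewport(b.rect, viewportHeight))
    .map(({ index }) => index);
}
```

In a page you would gather `element.getBoundingClientRect()` for each placeholder after scrolling (throttled, for example, with `requestAnimationFrame`), pass `window.innerHeight`, and queue the returned indices for decoding. Modern browsers also offer `IntersectionObserver`, whose `rootMargin` option can report the same "about to be visible" condition without polling.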

Why doesn’t the browser do this for me automatically?

Interestingly, there has been a discussion on the W3C’s www-style mailing list about a `display: optional` CSS declaration that would allow the browser to deprioritize the painting of certain elements and deal with them separately as time allows. For an image-heavy application like a gallery, that would mean the rest of the page can be rasterized (hopefully quickly), while the images are handled separately and show up whenever they’ve been decoded and resized. Essentially, it would build the behavior I’ve hacked together in my demo into the browser itself.

So far no conclusion has been reached, but if you’re interested in contributing and giving the idea some support, you totally should!

Conclusion

Taking control of image handling from the browser is without doubt an extreme move. As always, you should regard performance as a feature, and a feature as advanced as this one should be weighed against all the additional code that will need to be written and then, more importantly, maintained.

Decoding images in JavaScript is undoubtedly slower than decoding in C++, but we gain control, and since the image handling is outside of the normal rasterization path, we can proceed with rasterizing anything that isn’t an image. Handling images yourself may be the difference between having a janky, juddery app and a super slick, high-performance one, but it comes with risks, so take care!